Why do we write tests?

It’s a valid question. Tests, like all other code, are a liability – and therefore, they should earn their right to exist.

So, why should we write tests? What’s their raison d’être?

We write automated tests to continuously verify the behavior of our application as early as possible.

Every word in that sentence matters.

Our tests verify that our application works as we and other stakeholders expect. As professional software developers, we’re responsible for the way the application works. Our goal should be to build high-quality software that serves the end user. To do that, we need to ensure the application works as it should.

Of course, any application that survives even a few months of production use will inevitably change. As our application grows due to new and shifting requirements, we want our tests to continue to verify that everything is still working as expected. That’s why they should be automated.

But it’s crucial to understand that we want to verify the behavior of our application – not the internal implementation.

We want to ensure that our application as a whole, as well as each module and function, accomplishes the task that we created it to do. If, instead, we test how it accomplishes those tasks, we risk coupling our tests to the concrete implementation, which increases the cost of changing our application – just the opposite of what we want. That is why it’s often helpful to write the tests first, documenting the behavior in executable form before we’ve even written the implementation.
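The difference between testing behavior and testing implementation can be sketched with a small example. Everything here is hypothetical and for illustration only: the `Cart` class, its `add` and `total_cents` methods, and the private `_items` list are assumed names, not part of any real library. The first test asserts only on what a caller can observe; the second reaches into internal state.

```python
class Cart:
    """Hypothetical shopping cart, used only for illustration."""

    def __init__(self):
        # Internal detail: this could later become a dict or a database row.
        self._items = []

    def add(self, name, price_cents, quantity=1):
        self._items.append((name, price_cents, quantity))

    def total_cents(self):
        return sum(price * qty for _, price, qty in self._items)


def test_total_reflects_added_items():
    # Behavior: asserts only on the public result a caller depends on.
    cart = Cart()
    cart.add("pen", 150, quantity=2)
    cart.add("notebook", 400)
    assert cart.total_cents() == 700


def test_add_appends_tuple_to_items():
    # Implementation: passes today, but fails as soon as _items changes
    # shape, even if the cart still computes the correct total.
    cart = Cart()
    cart.add("pen", 150, quantity=2)
    assert cart._items == [("pen", 150, 2)]
```

If `_items` were replaced with a dictionary keyed by name, the first test would keep passing without modification, while the second would need to be rewritten even though nothing a user cares about had changed.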

And we want those tests to run continuously. Each time we change a function, we should run that function’s tests. When we’re finished working on that function, we should run the module’s tests. When we finish a feature, we should run all of our tests. And, of course, before we merge a branch, we should run all of our tests again. That’s why it’s important that tests run fast.

That’s how we catch regressions before they reach our users – and it’s why we want to verify the behavior of the application as early as possible.

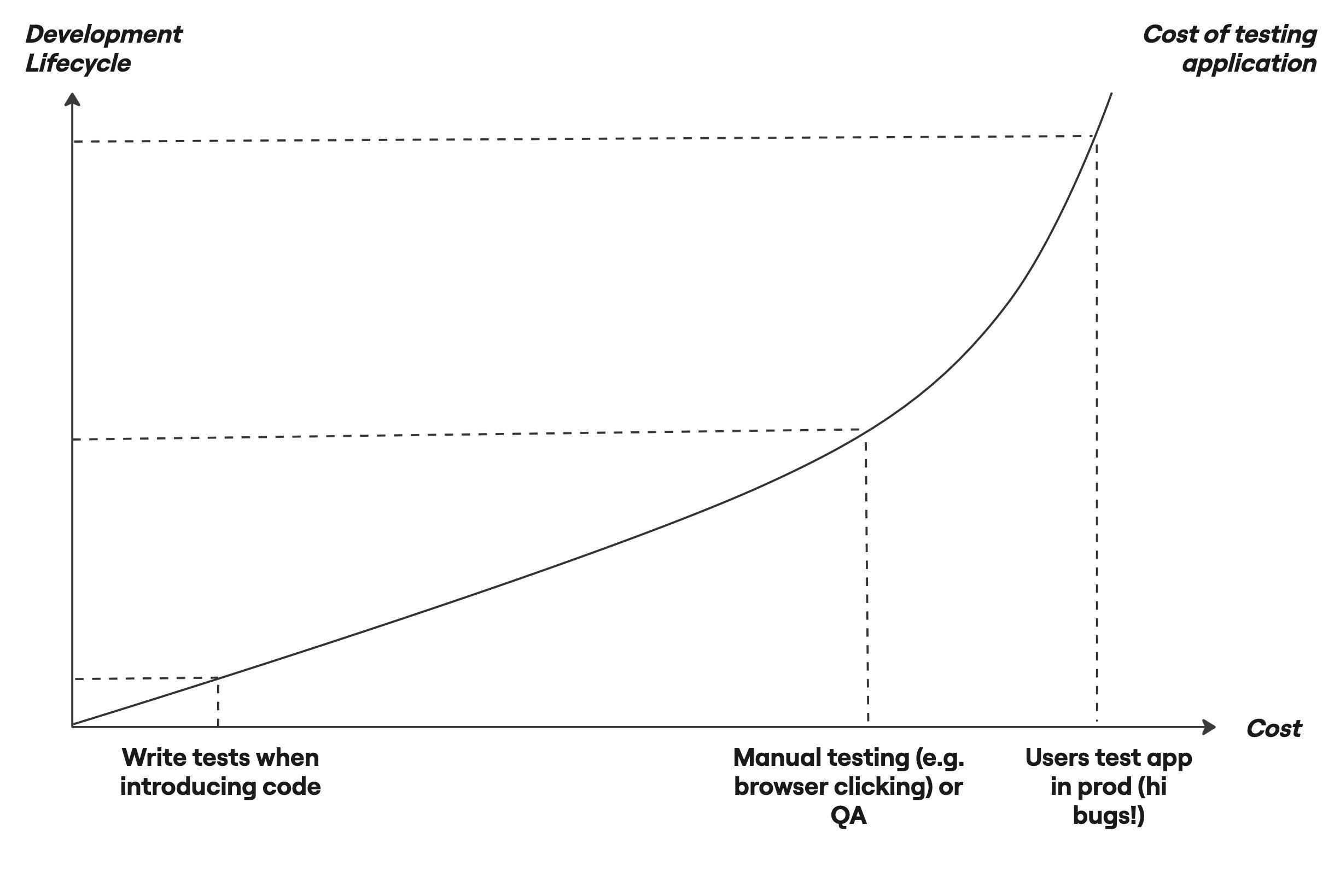

The reality is that everybody tests their applications, regardless of whether or not they write automated tests:

- You can test your application with automated tests (you can even write the test first!),

- You can test your application manually before you deploy it – e.g. clicking around in the browser as you develop a feature, and once again before deploying to production, to make sure things “look” okay (something that is slower, less repeatable, and therefore more costly!), or

- You can let your users test your application in production! (Here come the bugs.)

Each of those is a different method of testing your application. They test at different points in the development process and at a different cost to you.

Automated tests happen early, when developers have the most context on the code they’re testing, and are therefore the cheapest way to test your application. Testing in QA or manually via the browser happens later (and it’s slower), which increases the turnaround time required to fix any regressions. Finally, having your users test your application in production happens very late and is very costly. Not only is it expensive to find and fix the bug, it is also costly to your company’s reputation.

So, if you want to decrease the cost of testing your application, do it as early as possible in the development process. Do it when you’re first writing the code.